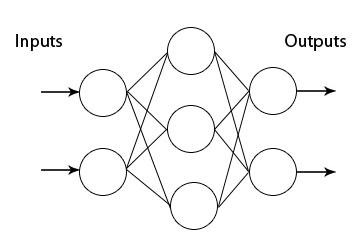

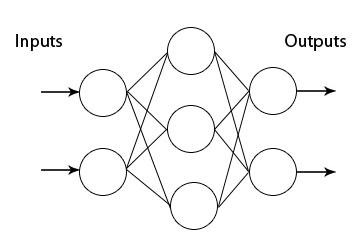

A Multi Layer Perceptron (MLP) is a feedforward neural network with one or more hidden layers between the input and output layers. Feedforward means that data flows in one direction, from the input layer to the output layer. This type of network is trained with the backpropagation learning algorithm. MLPs are widely used for pattern classification, recognition, prediction and approximation. Unlike a single-layer perceptron, a Multi Layer Perceptron can solve problems that are not linearly separable.
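To make the point about linear separability concrete, here is a minimal sketch in plain Java (no Neuroph classes) of a 2-2-1 feedforward network that computes XOR. The class name `XorByHand`, the step activation and the weights are illustrative assumptions chosen by hand; they are not what Neuroph would learn during training.

```java
/** Hand-wired 2-2-1 feedforward network that computes XOR (illustrative sketch). */
class XorByHand {

    // Step activation: outputs 1 when the weighted sum is positive, otherwise 0.
    static double step(double x) {
        return x > 0 ? 1.0 : 0.0;
    }

    // One forward pass: inputs -> two hidden neurons -> one output neuron.
    static double forward(double a, double b) {
        double h1 = step(a + b - 0.5); // hidden neuron 1 behaves like OR
        double h2 = step(a + b - 1.5); // hidden neuron 2 behaves like AND
        return step(h1 - h2 - 0.5);    // output: OR but not AND, which is XOR
    }

    public static void main(String[] args) {
        double[][] inputs = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
        for (double[] in : inputs) {
            System.out.println(in[0] + " XOR " + in[1] + " = " + forward(in[0], in[1]));
        }
    }
}
```

A single neuron cannot reproduce this mapping, because no single straight line separates the inputs (0, 1) and (1, 0) from (0, 0) and (1, 1). The hidden layer supplies two intermediate features that the output neuron can combine.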

To create and train a Multi Layer Perceptron neural network using Neuroph Studio, do the following:

Step 1. Create Neuroph project.

Click File > New Project.

Select Neuroph Project, and click Next.

Enter the project name and location, and click Finish.

The project is now created; next, create the neural network.

Step 2. Create Multi Layer Perceptron network.

Click File > New File.

Select the project from the Project drop-down menu, select the Neural Network file type, and click Next.

Enter the network name, select the Multi Layer Perceptron network type, and click Next.

Enter the number of input neurons (2), hidden neurons (3) and output neurons (1). Leave the Use Bias Neurons box checked. Choose a transfer function, Sigmoid or Tanh, from the drop-down menu (this example uses Tanh), choose Backpropagation as the learning rule, and click the Create button.

This creates a Multi Layer Perceptron neural network with two neurons in the input layer, three in the hidden layer and one in the output layer. With Tanh selected, all neurons will use the Tanh transfer function.
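The two transfer functions offered in the wizard differ mainly in their output range. The sketch below uses the standard textbook definitions in plain Java. The class `TransferFunctions` is hypothetical, and Neuroph's own implementations may expose extra parameters (such as slope) that are not modelled here.

```java
/** Textbook sigmoid and tanh transfer functions (illustrative sketch, not Neuroph code). */
class TransferFunctions {

    // Logistic sigmoid: squashes any real input into the open interval (0, 1).
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Hyperbolic tangent: squashes any real input into the open interval (-1, 1).
    static double tanh(double x) {
        return Math.tanh(x);
    }

    public static void main(String[] args) {
        double[] samples = {-10.0, -1.0, 0.0, 1.0, 10.0};
        for (double x : samples) {
            System.out.println("x=" + x + " sigmoid=" + sigmoid(x) + " tanh=" + tanh(x));
        }
    }
}
```

Because Tanh is centred on zero while Sigmoid is centred on 0.5, the same network can train at different speeds with each, which is worth keeping in mind when comparing iteration counts.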

Now we shall train this network to learn the logical XOR function. We'll create a new training set according to the XOR truth table.

Step 3. Create training set.

In the main menu, click File > New File to open the training set wizard.

Select the Data set file type, then click Next.

Enter the training set name, choose Supervised as the training set type from the drop-down list, enter the number of inputs and outputs (2 inputs and 1 output for XOR) as shown in the picture below, and click the Next button.

Then create the training set by entering training elements as input and desired output values for the neurons in the input and output layers, respectively. Use the Add row button to add new elements, and click the OK button when finished.

Step 4. Training network.

To start the network training procedure, drag and drop the training set onto the corresponding field in the network window; the 'Train' button in the toolbar will become enabled. Click the 'Train' button to open the Set Learning Parameters dialog.

In the Set Learning Parameters dialog, keep the default learning parameters and just click the Train button.

In this run, training stopped after 1700 iterations with the total net error under 0.01. Try using a different transfer function and learning rate, and observe the results.
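The stopping condition described above (total net error under 0.01) can be sketched in plain Java as follows. This assumes the total net error is a mean squared error over all training rows and outputs; the exact formula Neuroph uses may differ, for example by a constant factor. The class `StopCriterion` and the sample output values are hypothetical.

```java
/** Mean-squared-error stopping check (illustrative sketch; formula is an assumption). */
class StopCriterion {

    static final double MAX_ERROR = 0.01; // threshold quoted in the tutorial text

    // Mean of squared differences between desired and actual outputs over all rows.
    static double meanSquaredError(double[][] actual, double[][] desired) {
        double sum = 0.0;
        int count = 0;
        for (int row = 0; row < actual.length; row++) {
            for (int out = 0; out < actual[row].length; out++) {
                double diff = desired[row][out] - actual[row][out];
                sum += diff * diff;
                count++;
            }
        }
        return sum / count;
    }

    // Training would stop once the error drops below the threshold.
    static boolean shouldStop(double error) {
        return error < MAX_ERROR;
    }

    public static void main(String[] args) {
        double[][] desired = {{0}, {1}, {1}, {0}};             // XOR targets
        double[][] actual = {{0.05}, {0.95}, {0.95}, {0.05}};  // made-up network outputs
        double error = meanSquaredError(actual, desired);
        System.out.println("error=" + error + " stop=" + shouldStop(error));
    }
}
```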

Step 5. Testing trained network.

After training is complete, you can use the Test and SetIn buttons to test the network behaviour.

In the Set Network Input dialog, you can enter input values for the network, separated by spaces.
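For readers who want to feed the same kind of text to their own code, here is a minimal sketch of turning a space-separated string such as `0 1` into a `double[]`. The class `InputParser` is hypothetical and is not how Neuroph Studio parses the dialog internally.

```java
/** Parses space-separated numbers into an input vector (illustrative sketch). */
class InputParser {

    // Splits on runs of whitespace and converts each token to a double.
    // Throws NumberFormatException if a token is not a valid number.
    static double[] parse(String text) {
        String trimmed = text.trim();
        if (trimmed.isEmpty()) {
            return new double[0]; // no tokens, so no input values
        }
        String[] parts = trimmed.split("\\s+");
        double[] values = new double[parts.length];
        for (int i = 0; i < parts.length; i++) {
            values[i] = Double.parseDouble(parts[i]);
        }
        return values;
    }

    public static void main(String[] args) {
        double[] input = parse("0 1");
        System.out.println(java.util.Arrays.toString(input));
    }
}
```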

The result of the network test is shown in the picture below.

The value of the output neuron is close to 1, which is the desired output for the given input. The small difference represents the acceptable error.

Right-click the editor and choose Display Properties to set different visualization options (see picture below).

You can now experiment with different combinations of transfer function and learning rule by creating a new MLP network. For example, choose Backpropagation with Momentum as the learning rule.

Create the same training set (according to the XOR truth table) and click the Train button.

In the Set Learning Parameters dialog, set Momentum to 0.1 and click the Train button.

This time, training stopped after just 32 iterations with the total net error under 0.01.
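Momentum adds a fraction of the previous weight change to the current one, which can help training converge in fewer iterations. Below is a sketch of the textbook update rule in plain Java. The class `MomentumUpdate`, the learning rate of 0.2 and the constant gradients are assumptions for illustration; only the momentum value 0.1 comes from the text, and Neuroph's implementation may differ in detail.

```java
/** Textbook momentum weight update (illustrative sketch, not Neuroph code). */
class MomentumUpdate {

    // New weight change = gradient descent step + momentum * previous weight change.
    static double weightChange(double learningRate, double momentum,
                               double gradient, double previousChange) {
        return -learningRate * gradient + momentum * previousChange;
    }

    public static void main(String[] args) {
        double weight = 0.5;
        double previousChange = 0.0;
        double learningRate = 0.2; // assumed value, not taken from the tutorial
        double momentum = 0.1;     // value used in the tutorial
        double[] gradients = {1.0, 1.0, 1.0}; // made-up gradients for demonstration

        for (double gradient : gradients) {
            double change = weightChange(learningRate, momentum, gradient, previousChange);
            weight += change;
            previousChange = change; // remembered for the next step
            System.out.println("change=" + change + " weight=" + weight);
        }
    }
}
```

When successive gradients point the same way, each step is slightly larger than the one before it; when they alternate in sign, the momentum term damps the oscillation.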

Test the network using Set Input with the same values (0 1) as in the previous example.

The result of the network test is shown in the picture below.

The value of the output neuron is close to 1, which is the desired output for the given input. The small difference represents the acceptable error.

    package org.neuroph.samples;

    import java.util.Arrays;

    import org.neuroph.core.NeuralNetwork;
    import org.neuroph.nnet.MultiLayerPerceptron;
    import org.neuroph.core.data.DataSet;
    import org.neuroph.core.data.DataSetRow;
    import org.neuroph.util.TransferFunctionType;

    /**
     * This sample shows how to create, train, save and load simple Multi Layer Perceptron
     */
    public class XorMultiLayerPerceptronSample {

        public static void main(String[] args) {

            // create training set (logical XOR function)
            DataSet trainingSet = new DataSet(2, 1);
            trainingSet.addRow(new DataSetRow(new double[]{0, 0}, new double[]{0}));
            trainingSet.addRow(new DataSetRow(new double[]{0, 1}, new double[]{1}));
            trainingSet.addRow(new DataSetRow(new double[]{1, 0}, new double[]{1}));
            trainingSet.addRow(new DataSetRow(new double[]{1, 1}, new double[]{0}));

            // create multi layer perceptron
            MultiLayerPerceptron myMlPerceptron = new MultiLayerPerceptron(TransferFunctionType.TANH, 2, 3, 1);

            // learn the training set
            myMlPerceptron.learn(trainingSet);

            // test perceptron
            System.out.println("Testing trained neural network");
            testNeuralNetwork(myMlPerceptron, trainingSet);

            // save trained neural network
            myMlPerceptron.save("myMlPerceptron.nnet");

            // load saved neural network
            NeuralNetwork loadedMlPerceptron = NeuralNetwork.createFromFile("myMlPerceptron.nnet");

            // test loaded neural network
            System.out.println("Testing loaded neural network");
            testNeuralNetwork(loadedMlPerceptron, trainingSet);
        }

        public static void testNeuralNetwork(NeuralNetwork nnet, DataSet testSet) {
            for (DataSetRow dataRow : testSet.getRows()) {
                nnet.setInput(dataRow.getInput());
                nnet.calculate();
                double[] networkOutput = nnet.getOutput();
                System.out.print("Input: " + Arrays.toString(dataRow.getInput()));
                System.out.println(" Output: " + Arrays.toString(networkOutput));
            }
        }
    }

To learn more about Multi Layer Perceptrons and Backpropagation (the learning rule for Multi Layer Perceptrons), see:

http://www.learnartificialneuralnetworks.com/backpropagation.html

http://en.wikipedia.org/wiki/Multilayer_perceptron

http://en.wikipedia.org/wiki/Backpropagation