
Synergy of Neuroph framework and RapidMiner

By Vera Stojanovic, Faculty of Organization Sciences, University of Belgrade

A project for Intelligent Systems course

1. Introduction

RapidMiner is a leading open-source system for data mining. It is available as a stand-alone application for data analysis and as a data mining engine that can be integrated into other products. The idea of integrating Neuroph into RapidMiner comes from an analysis of the potential synergy and mutual benefits of the two frameworks. The Neuroph framework offers a wide range of neural network (NN) architectures and extensive customization of the NN model, which would be a valuable addition to the RapidMiner toolbox. RapidMiner, in turn, has systematic evaluation tools such as cross-validation, leave-one-out, bootstrapping, and others. It also has several parameter optimization procedures, which are quite useful in an application scenario. Finally, it makes it possible to compare the performance of the built model against other available algorithms, a comparison that is otherwise difficult because data formats and evaluation procedures generally differ.

These strengths of the two open-source frameworks are combined in NeurophRM, which allows users to define customized neural networks in Neuroph, save the definition in the application-specific .nnet file format, and then train, use and test that neural network model within RapidMiner. This experiment shows how a user can preprocess pictures in RapidMiner before analysis, and compares NeurophRM with other RapidMiner operators. The experimental results obtained with RapidMiner's own operators and with NeurophRM are then presented and discussed. The goal is to see how the two frameworks can cooperate and to compare the results of the operators.

2. Introduction to the problem

We start by choosing 5 pictures, each of which is preprocessed 4 times. Those 5 simple pictures can be downloaded here. After preprocessing there are 25 example sets, which can be downloaded here. They consist of the 5 original photos and the 20 preprocessed photos. The RapidMiner plugin for image recognition enables preprocessing of the chosen photos through a set of image processing operators. One of the operators used in this test is Histogram Equalizer (Figure 1.), which equalizes the histogram of the input image. The other is Gaussian Blur (Figure 2.), an image-space effect that creates a softly blurred version of the original image. The plugin offers a large number of preprocessing operators, so many variations of one image can be produced. The variations of one image together represent one class in the dataset. After making variations of a few pictures (5 in this case), you can see how well the operators recognize whether a randomly chosen picture belongs to a particular class.
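To make the idea of histogram equalization concrete, here is a minimal Python sketch of the textbook technique on a flat list of 8-bit gray values. It is not the plugin's implementation, which is not shown in this text; the function name `equalize_histogram` and the sample pixel values are made up for illustration.

```python
def equalize_histogram(pixels, levels=256):
    """Spread the gray values of a flat pixel list over the full range."""
    if not pixels:
        return []

    # Count how often each gray level occurs.
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1

    # Cumulative distribution: number of pixels at or below each level.
    cdf = []
    running = 0
    for count in histogram:
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = min(c for c in cdf if c > 0)
    if cdf_min == total:
        # Constant image: there is nothing to spread out.
        return list(pixels)

    # Map the lowest occupied level to 0 and the highest to levels - 1.
    scale = (levels - 1) / (total - cdf_min)
    return [round((cdf[value] - cdf_min) * scale) for value in pixels]


image = [100, 100, 110, 120, 120, 130]  # made-up, low-contrast gray values
print(equalize_histogram(image))
```

The low-contrast input, which only occupies levels 100 to 130, is stretched so that its darkest value maps to 0 and its brightest to 255, while the relative order of the pixels is preserved.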

Figure 1: Use of Gaussian Blur in processing pictures

Figure 2: Use of Histogram Equalizer in processing pictures

Figure 3 and Figure 4 show, as an example, how a picture looks before and after Gaussian Blur.

Figure 3: Picture before Gaussian Blur

Figure 4: Picture after Gaussian Blur

Next, a dataset was created in the Neuroph open-source framework from those 25 example sets. The how-to "Image recognition with neural networks" shows the steps for creating a dataset in Neuroph. RapidMiner does not use the same dataset format as Neuroph: a dataset created in Neuroph has the .tset extension, while RapidMiner works with datasets that have the .txt extension. The .tset file therefore has to be converted into a .txt file that RapidMiner can read. The TrainingSet class in Neuroph has a saveAsText method, which saves a Neuroph dataset as a file with the .txt extension. (Figure 5.)
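Conceptually, the conversion writes every training element as one delimited text row: the input values followed by the desired output values. The Python sketch below illustrates that idea only. It does not use the Neuroph API, and the function name `dataset_to_text`, the comma delimiter and the sample values are assumptions made for illustration.

```python
import csv
import io


def dataset_to_text(elements, delimiter=","):
    """Serialize (inputs, desired_outputs) pairs as delimited text rows."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    for inputs, outputs in elements:
        # One row per example: all inputs first, then the desired outputs.
        writer.writerow(list(inputs) + list(outputs))
    return buffer.getvalue()


# Two made-up examples with 3 inputs and 2 output values each.
elements = [
    ([0.0, 0.5, 1.0], [1, 0]),
    ([1.0, 0.5, 0.0], [0, 1]),
]
print(dataset_to_text(elements), end="")
```

A file written this way can be read by a delimited-text reader, provided the reader is told which columns hold the attributes and which hold the label.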

Figure 5: Transforming a dataset with the .tset extension into a .txt file that can be used in RapidMiner

Now there is a dataset that can be used in RapidMiner. Two different processes were then built in RapidMiner, one with RapidMiner's own operators and the other with Neuroph Classification NN, which is the operator provided by NeurophRM. Both processes contain the Read CSV and Validation operators. Read CSV reads the dataset. The Validation operator divides the example set into a training part and a testing part: it randomly chooses a sample of the examples and trains a model on it, and the remaining examples form a test set on which the model is evaluated. Inside Validation, the classification operator goes into the training part, and Apply Model together with Performance goes into the testing part. (Figure 6. and Figure 7.)
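The random split performed by the Validation operator can be sketched in a few lines of Python. This is only an illustration of the technique, not RapidMiner code: the function name `split_validation`, the 0.75 training ratio and the fixed seed are assumptions, since the settings used in the experiment are not stated.

```python
import random


def split_validation(examples, train_ratio=0.75, seed=42):
    """Randomly split examples into a training part and a testing part."""
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    indices = list(range(len(examples)))
    rng.shuffle(indices)

    cut = int(len(examples) * train_ratio)
    train = [examples[i] for i in indices[:cut]]
    test = [examples[i] for i in indices[cut:]]
    return train, test


examples = list(range(20))  # stand-ins for 20 labeled examples
train, test = split_validation(examples)
print(len(train), "training examples,", len(test), "test examples")
```

A model is fitted on `train` only and then scored on `test`, so the reported accuracy reflects examples the model has not seen during training.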

Figure 6: First part of process: Read CSV and Validation

Figure 7: Second part of the process, in which the dataset was evaluated with DecisionTree

For RapidMiner's own operators we use DecisionTree (Figure 7.), k-NN and NaiveBayes, and for NeurophRM we use the Neuroph Classification NN operator. (Figure 8.) DecisionTree is an operator that learns a decision tree. To classify an example, the tree is traversed top-down. Every node in a decision tree is labeled with an attribute, and the example's value for this attribute determines which of the outgoing edges is taken. Roughly speaking, the tree induction algorithm works as follows: whenever a new node is created at a certain stage, an attribute is picked to maximize the discriminative power of that node with respect to the examples assigned to the particular subtree. This discriminative power is measured by a criterion that can be selected by the user. k-NN is an operator that provides a k-nearest-neighbor implementation, classifying an example according to the classes of its k nearest training examples. NaiveBayes is an operator that returns a classification model using estimated normal distributions. The Neuroph Classification NN operator trains a neural network that is defined in the Neuroph framework and loaded from a .nnet file.
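As an illustration of what a "discriminative power" criterion can look like, the Python sketch below computes information gain for a nominal attribute. Information gain is used here as one common example of such a criterion; the text does not say which criterion was selected in the experiment, and the function names and toy data are made up.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())


def information_gain(values, labels):
    """Entropy reduction obtained by splitting the labels on an attribute."""
    total = len(labels)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(value, []).append(label)
    # Weighted entropy that remains after the split.
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


labels = ["I", "I", "II", "II"]      # toy class labels
separating = ["x", "x", "y", "y"]    # attribute that splits the classes cleanly
uninformative = ["x", "y", "x", "y"] # attribute unrelated to the class
print(information_gain(separating, labels))
print(information_gain(uninformative, labels))
```

When building a node, a tree learner of this kind would evaluate every candidate attribute with the chosen criterion and split on the one with the highest score.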

Figure 8: Process with Neuroph Classification NN

As mentioned earlier, the Neuroph Classification NN operator needs a .nnet file from Neuroph. The steps for creating the .nnet file follow. First we create a new neural network. (Figure 9.)

Figure 9: Creating a NeuralNetwork

Next, we choose Multi Layer Perceptron. (Figure 10.)

Figure 10: Choosing Multi Layer Perceptron

Finally, the parameters of the neural network are set. The number of input neurons is 1200 because the dataset has 1200 attributes, and the 5 classes are represented by the outputs. (Figure 11.)
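The relation between the dataset and the network dimensions can be sketched as follows in Python. The sketch assumes a one-output-neuron-per-class encoding, which is a common choice but is not confirmed by the text; the helper names are hypothetical.

```python
NUM_INPUTS = 1200  # attributes per example, as stated in the text
NUM_CLASSES = 5    # assumed encoding: one output neuron per class


def one_hot(class_index, num_classes=NUM_CLASSES):
    """Desired output vector for a class: 1.0 at its position, 0.0 elsewhere."""
    if not 0 <= class_index < num_classes:
        raise ValueError("class index out of range")
    return [1.0 if i == class_index else 0.0 for i in range(num_classes)]


def predicted_class(outputs):
    """Index of the output neuron with the highest activation."""
    return max(range(len(outputs)), key=lambda i: outputs[i])


def is_valid_row(row):
    """A dataset row must hold all inputs followed by all desired outputs."""
    return len(row) == NUM_INPUTS + NUM_CLASSES


row = [0.0] * NUM_INPUTS + one_hot(2)
print(is_valid_row(row), predicted_class([0.1, 0.2, 0.9, 0.3, 0.0]))
```

If the number of input or output neurons does not match the row layout of the dataset, training cannot proceed, which is why these two parameters are taken directly from the dataset.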

Figure 11: Parameters of neural network

3. Preliminary experimental results

Preliminary results show that NeurophRM gives good results compared with RapidMiner's operators. These results show that the synergy of RapidMiner and the Neuroph open-source framework allows a neural network to be compared with other approaches.

Figure 12: Comparison of accuracy

DecisionTree gives 53.30% accuracy. (Figure 12.) The picture below shows that DecisionTree can successfully predict that

In the rightmost column (class precision) you can see that class II has the best prediction, as high as 81.82%. The final accuracy of DecisionTree is 53.30%.
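Accuracy and class precision are both read off a confusion matrix. The Python sketch below shows the arithmetic, assuming the layout in which each row holds the examples predicted as one class and each column holds the true class. The counts are made up for illustration and are not the counts from this experiment; they are chosen so that 9 correct predictions out of 11 give a precision of 81.82%.

```python
def accuracy(matrix):
    """Share of all examples that lie on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def class_precision(matrix, k):
    """Share of the examples predicted as class k that truly belong to k."""
    predicted_as_k = sum(matrix[k])
    return matrix[k][k] / predicted_as_k if predicted_as_k else 0.0


# Hypothetical 2-class matrix: rows = predicted class, columns = true class.
hypothetical = [
    [9, 2],  # predicted class 0: 9 correct, 2 wrong
    [1, 8],  # predicted class 1: 1 wrong, 8 correct
]
print(f"class precision: {class_precision(hypothetical, 0):.2%}")
print(f"accuracy: {accuracy(hypothetical):.2%}")
```

Class precision is computed per predicted class, so one class can have a high precision even when the overall accuracy of the model is much lower.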

Figure 12: Performance of DecisionTree

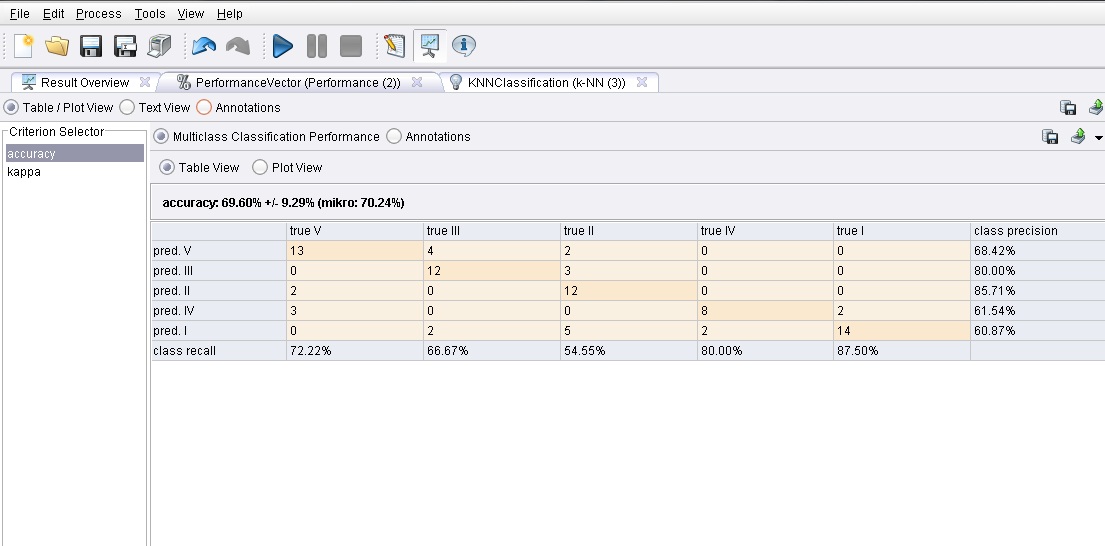

k-NN gives 69.60% accuracy. (Figure 13.)

Figure 13: Performance of k-NN

NaiveBayes gives 32.89% accuracy. (Figure 14.)

Figure 14: Performance of NaiveBayes

NeurophRM gives 63.80% accuracy. (Figure 15.)

Figure 15: Performance of NeurophRM

4. Discussion

These results show that NeurophRM can be compared with the other algorithms available in RapidMiner. The introduction highlighted that RapidMiner has systematic evaluation tools, several parameter optimization procedures, and the possibility to compare the performance of the built model against other available algorithms. Some of those strengths are demonstrated in this experiment. The results show that both RapidMiner and Neuroph can benefit from the integration of the two frameworks.

